Appendix - Controls

Data Quality Controls template

You can download the template here

Data Quality dimensions

Definition of dimensions:

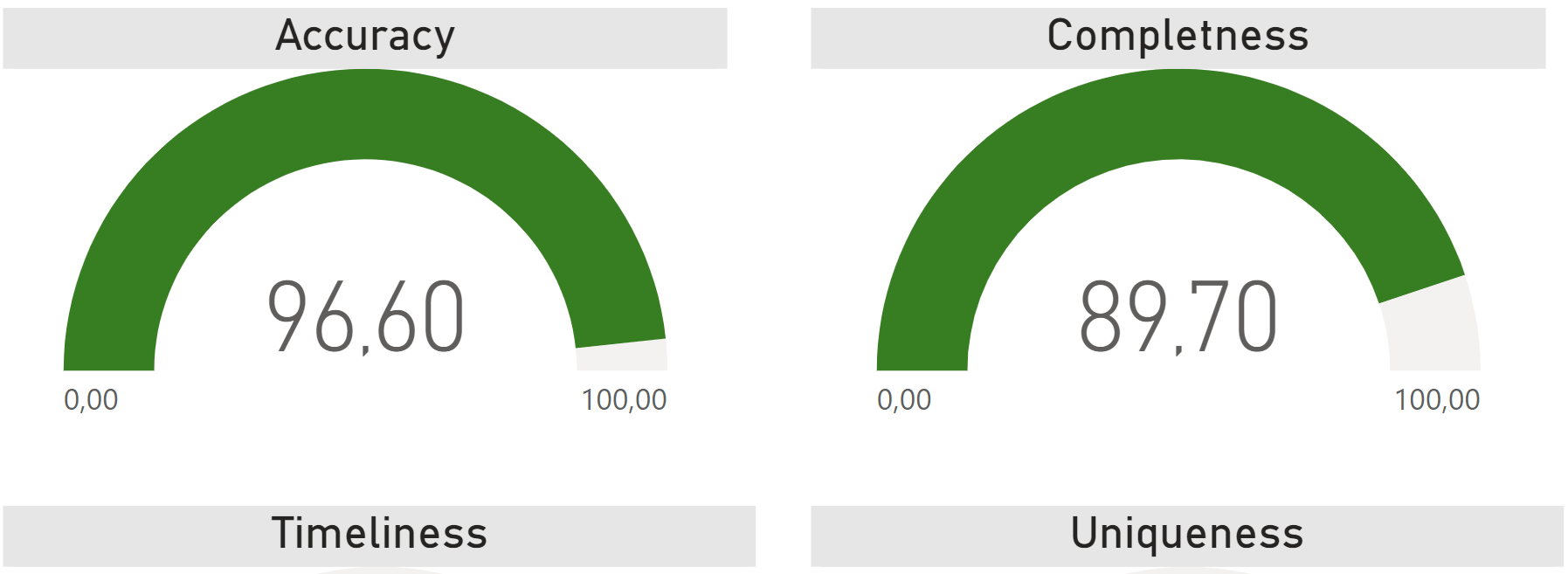

Accuracy:

Accuracy refers to the closeness of data values to a standard or true value. High accuracy means the data correctly represents the real-world scenario it is supposed to model. Controls for accuracy include validation against known values or external verification. Accuracy measures the degree to which data values reflect reality (e.g. without errors or omissions). Most measures of accuracy rely on comparison to a data source that has been verified as accurate (e.g. curated system of reference/MDM, trusted market data).

Accuracy Example: All records in the Customer table must have accurate CustomerName, CustomerBirthdate, and CustomerAdress fields when compared to the tax form.
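The accuracy example above could be sketched as a field-by-field comparison against the trusted source. This is a minimal sketch only: it assumes both the Customer records and the tax-form data are available as dictionaries keyed by a hypothetical customer identifier, and the function name `accuracy_mismatches` and the sample data are illustrative, not part of the template.

```python
# Fields compared against the trusted reference (names taken from the example above).
FIELDS = ("CustomerName", "CustomerBirthdate", "CustomerAdress")


def accuracy_mismatches(customers, reference):
    """Return (customer_id, field) pairs whose value differs from the reference."""
    mismatches = []
    for customer_id, record in customers.items():
        # A customer missing from the reference is treated as mismatching on every field.
        trusted = reference.get(customer_id, {})
        for field in FIELDS:
            if record.get(field) != trusted.get(field):
                mismatches.append((customer_id, field))
    return mismatches


# Hypothetical sample data: record 1 matches the tax form, record 2 does not.
customers = {
    1: {"CustomerName": "Ada Lovelace", "CustomerBirthdate": "1815-12-10", "CustomerAdress": "London"},
    2: {"CustomerName": "Alan Turing", "CustomerBirthdate": "1912-06-23", "CustomerAdress": "Manchester"},
}
tax_forms = {
    1: {"CustomerName": "Ada Lovelace", "CustomerBirthdate": "1815-12-10", "CustomerAdress": "London"},
    2: {"CustomerName": "Alan Turing", "CustomerBirthdate": "1912-06-23", "CustomerAdress": "Wilmslow"},
}

print(accuracy_mismatches(customers, tax_forms))
```

An empty result means every compared field agrees with the reference; any returned pair identifies the record and field to investigate.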

Completeness:

Completeness measures whether all required data is present in the dataset. It checks for missing data or incomplete records. Completeness controls ensure that necessary data fields are filled and relevant records are not omitted. It is a measure of the presence of expected data: does the data contain all the expected information? Are fields populated according to whether they are mandatory, useful, or optional?

Completeness example: All records must have a value populated in the CustomerName field.
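As an illustration, the completeness example could be measured as the share of records with a populated field. The sketch below assumes records are plain dictionaries and treats `None`, empty strings, and whitespace-only strings as missing; the function name `completeness_ratio` is hypothetical.

```python
def completeness_ratio(records, field="CustomerName"):
    """Share of records with a non-empty value in `field` (1.0 for an empty dataset)."""
    if not records:
        return 1.0
    # None, "" and whitespace-only values all count as missing.
    populated = sum(1 for r in records if str(r.get(field) or "").strip())
    return populated / len(records)


# Hypothetical sample: two of the four records have a usable CustomerName.
records = [
    {"CustomerName": "Ada"},
    {"CustomerName": ""},
    {"CustomerName": None},
    {"CustomerName": "Alan"},
]
print(completeness_ratio(records))
```

A ratio of 1.0 means the control passes as stated in the example; anything lower indicates records to complete.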

Integrity:

Data integrity refers to the correctness and reliability of data throughout its lifecycle, that is, maintaining and assuring its accuracy and consistency over time. Integrity controls include enforcing data relationships and business rules to prevent corruption. Integrity measures whether the data complies with the technical rules (expected format, expected reference) and the functional rules (constraints, data that has not been altered) that have been specified for it.

Consistency:

Consistency ensures that the data is consistent within the dataset and across all data sources. It checks that data does not contradict itself and remains in sync across different systems. Consistency checks involve comparing data entries in different databases to ensure they match. It is a measure of the degree to which data meets rules for content. Consistency is measured on different levels: record-level and cross-record consistency (expected relationship between one set of attribute values and another attribute), and temporal consistency (value evolution at different points in time).

Consistency example: The count of records loaded today must be within +/- 5% of the count of records loaded yesterday.
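The temporal consistency example above amounts to a relative-difference check between two daily record counts. A minimal sketch, assuming the two counts are already available as integers; the function name `within_tolerance` and the handling of a zero count for yesterday are assumptions made for illustration.

```python
def within_tolerance(today_count, yesterday_count, tolerance=0.05):
    """True if today's count is within +/- `tolerance` of yesterday's count."""
    if yesterday_count == 0:
        # Assumption: with no records yesterday, only an empty load today is consistent.
        return today_count == 0
    return abs(today_count - yesterday_count) / yesterday_count <= tolerance


# Hypothetical counts: a 4% increase passes, a 10% increase fails.
print(within_tolerance(1040, 1000))
print(within_tolerance(1100, 1000))
```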

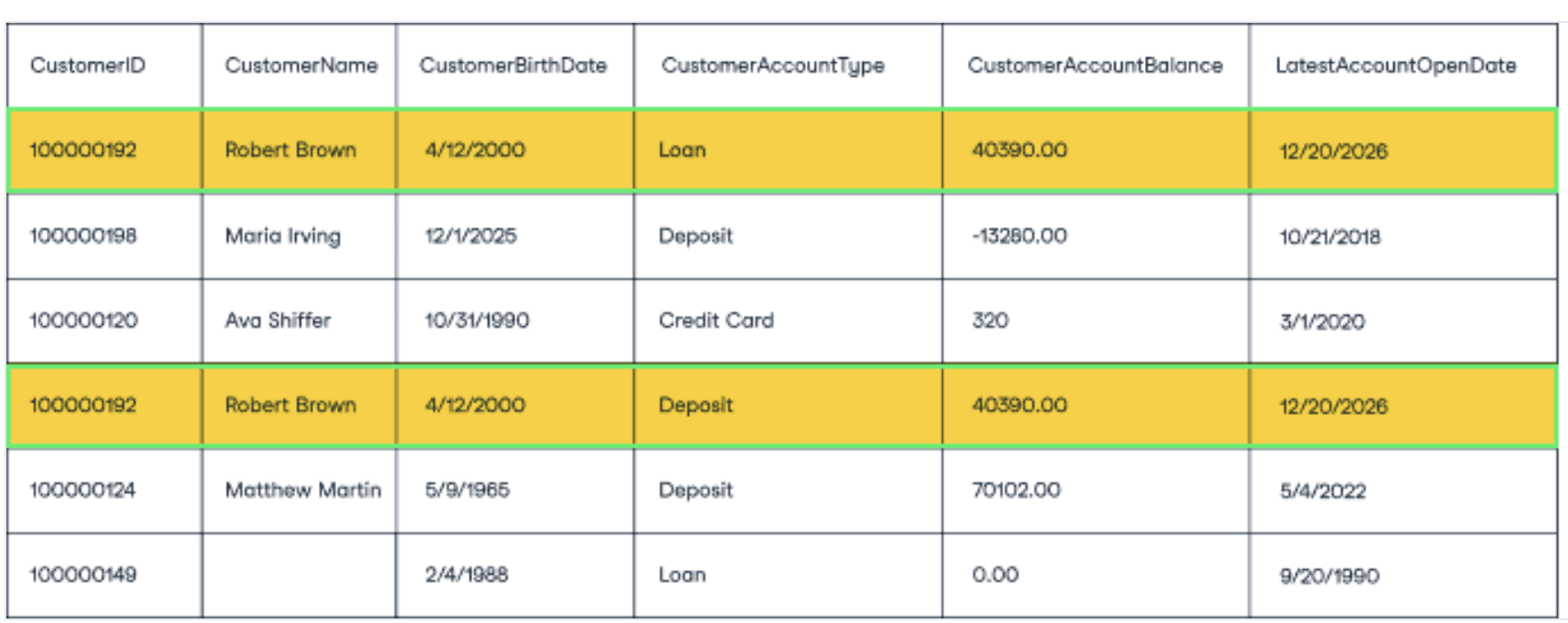

Uniqueness:

Uniqueness means that no data records are duplicated in the dataset. Each record should be unique, identifiable, and not redundant. Controls for uniqueness often involve identifying and removing duplicate entries or records. Uniqueness measures whether a given data item occurs no more than once in a given context (Information System, application, table, …).

Uniqueness example: All records must have a unique CustomerID and CustomerName.
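One possible way to detect violations of the uniqueness example is to count occurrences of the key and report any that appear more than once. The sketch below assumes records are dictionaries and, as an interpretation of the example, treats the pair (CustomerID, CustomerName) as the key; pass `key_fields=("CustomerID",)` to check a single field instead. The function name `duplicate_keys` is hypothetical.

```python
from collections import Counter


def duplicate_keys(records, key_fields=("CustomerID", "CustomerName")):
    """Return the key tuples that occur in more than one record."""
    counts = Counter(tuple(r.get(f) for f in key_fields) for r in records)
    return [key for key, n in counts.items() if n > 1]


# Hypothetical sample: the first and third records share the same key.
records = [
    {"CustomerID": 1, "CustomerName": "Ada"},
    {"CustomerID": 2, "CustomerName": "Alan"},
    {"CustomerID": 1, "CustomerName": "Ada"},
]
print(duplicate_keys(records))
```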

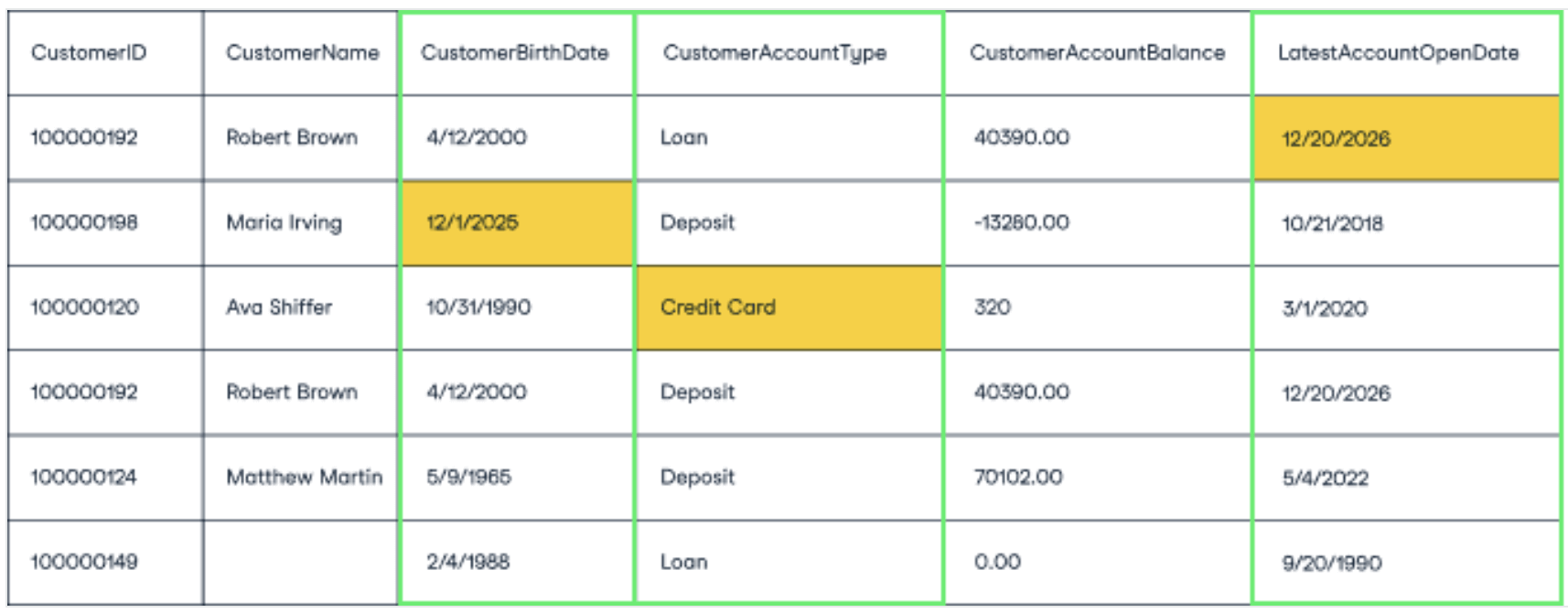

Validity:

Validity measures whether the data follows the specified formats, ranges, and rules defined for it. Valid data must adhere to the expected data model, including correct formats for dates, numbers, and enumerated types. Validity controls include format checks and rule validations. Validity is the extent to which data conforms to the syntax (value range, value type, …) of its definition. It is verified against a defined set of reference values (e.g. using reference data) and a defined domain of values (e.g. data type, format, and precision).

Validity examples:

- CustomerBirthDate value must be a date in the past.
- CustomerAccountType value must be either Loan or Deposit.
- LatestAccountOpenDate value must be a date in the past.
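The three validity rules above could be expressed as a single record-level check. This is a sketch under assumptions: the record is a dictionary, date fields hold `datetime.date` objects (not strings or `datetime` values), and "in the past" is read as strictly before today. The function name `validity_errors` and the `today` parameter (added to keep the check reproducible) are illustrative.

```python
from datetime import date

# Allowed values taken from the CustomerAccountType rule above.
ALLOWED_ACCOUNT_TYPES = {"Loan", "Deposit"}


def validity_errors(record, today=None):
    """Return the names of the fields that break a validity rule."""
    today = today or date.today()
    errors = []
    # Both date fields must be date objects strictly before `today`.
    for field in ("CustomerBirthDate", "LatestAccountOpenDate"):
        value = record.get(field)
        if not isinstance(value, date) or value >= today:
            errors.append(field)
    if record.get("CustomerAccountType") not in ALLOWED_ACCOUNT_TYPES:
        errors.append("CustomerAccountType")
    return errors


# Hypothetical record: the account type is outside the allowed set.
record = {
    "CustomerBirthDate": date(1990, 5, 17),
    "CustomerAccountType": "Savings",
    "LatestAccountOpenDate": date(2020, 1, 15),
}
print(validity_errors(record, today=date(2024, 1, 1)))
```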

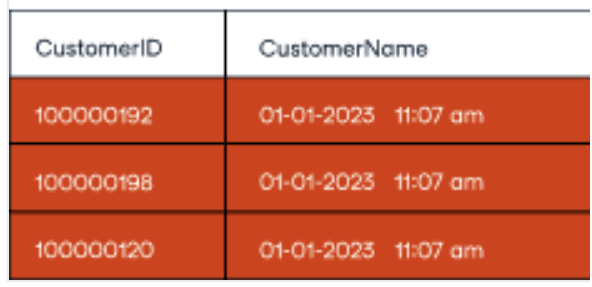

Timeliness:

Timeliness refers to the data being up-to-date and available when needed. It means the data is sufficiently current for the task at hand. Controls for timeliness involve checking the data timestamps and ensuring the data is refreshed or updated at appropriate intervals. Timeliness measures the “freshness” of the Data by analyzing the date of last update compared to the lifecycle of the given Data. Timeliness expectations are based on the level of volatility of Data.

Timeliness example: All records in the customer dataset must be loaded by 9:00 am.
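A timeliness control like the one above can be sketched as a comparison of load timestamps against a cutoff time. The sketch assumes each record carries a load timestamp as a `datetime` in the same time zone as the cutoff, and that "by 9:00 am" includes 9:00 exactly; the function name `loaded_on_time` is hypothetical.

```python
from datetime import datetime, time


def loaded_on_time(load_timestamps, cutoff=time(9, 0)):
    """True if every record was loaded at or before the cutoff time of its load day."""
    return all(ts.time() <= cutoff for ts in load_timestamps)


# Hypothetical load timestamps: the second record arrived after the cutoff.
timestamps = [
    datetime(2024, 3, 1, 8, 45),
    datetime(2024, 3, 1, 9, 10),
]
print(loaded_on_time(timestamps))
```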

There are other possible dimensions:

Relevancy:

Measures whether data is appropriate and applicable to the contexts in which it is used. It verifies that data answers business questions and is aligned with current objectives. Analyzing user queries and data access logs can help assess relevance.

Reasonability:

Evaluates the logic and credibility of data values within their specific context. Data is compared with statistical models or standards to identify values that are implausible or deviate significantly from expected trends.

Objectivity:

Degree to which data is free from bias and accurately represents the reality it is intended to model. Analysis of the origin of data and collection processes to ensure that there is no systematic or subjective bias that could influence the results.

Clarity:

Clarity of data presentation, including simplicity of language, structure and explanation of data to facilitate understanding. Review metadata, legends, and explanations associated with datasets to ensure users can unambiguously understand the data.

Availability:

Ease and reliability of access to data when necessary by authorized users. Verification of authentication systems and security protocols to ensure data is available to authorized users while protecting against unauthorized access.

Understandability:

Extent to which data is easily interpretable and understandable by users without the need for additional training or in-depth technical knowledge. Assessing the training and skills of users in relation to the data they use, and adjusting the presentation of the data to improve understandability.

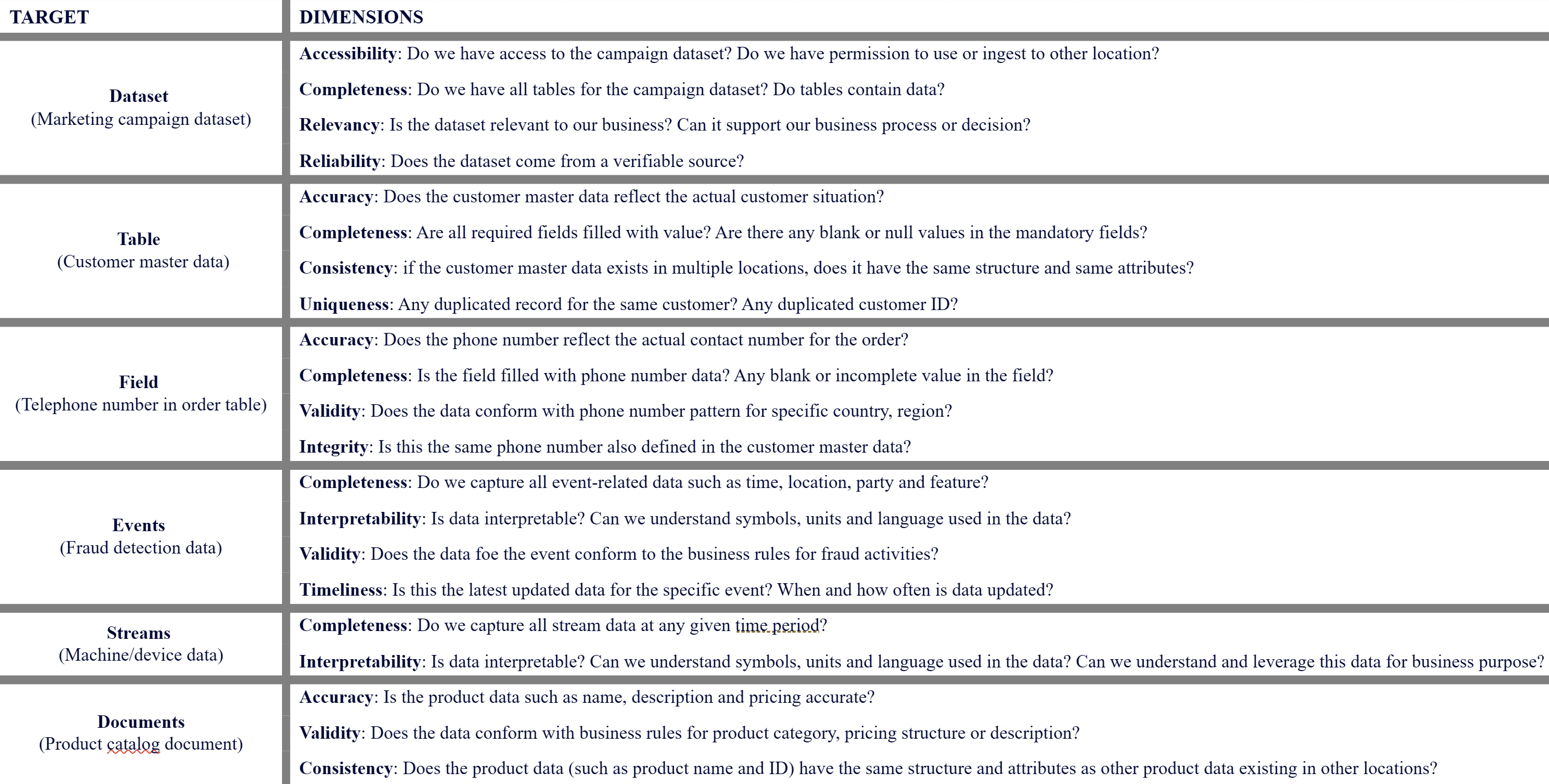

These dimensions serve different purposes and apply to different areas or sectors of activity. Below is a non-exhaustive list of sectors for which data quality is a particular focus:

Data Quality thresholds

Target on which to apply controls

A threshold is a predefined cutoff value that serves as a reference point for evaluating data quality performance indicators. When data exceeds or does not meet this threshold, it may trigger an alert or corrective action, indicating that an investigation or intervention is necessary to resolve the identified data quality issues. Thresholds are crucial for automated data quality monitoring and proactive data management.

Thresholds enable continuous, automated monitoring of data quality, facilitating early detection of issues before they impact business processes or decision-making. By setting thresholds for different data quality indicators, organizations can prioritize the issues that require immediate attention, thereby optimizing the use of resources for data maintenance. Thresholds also help standardize quality criteria across the organization, ensuring that all departments use a consistent approach to assessing data quality. By monitoring performance against defined thresholds, organizations can identify trends and areas for improvement, supporting a cycle of continuous improvement in data quality.

Thresholds are a vital tool for monitoring, managing and improving data quality. They enable organizations to remain responsive to data quality issues, supporting data integrity, compliance, and overall effectiveness.

Data must meet strict standards of quality and integrity. Thresholds help ensure compliance by quickly identifying deviations from regulatory requirements.

High-quality data is essential for the efficient operation of business processes. Thresholds help maintain this state by flagging low-quality data that could hinder operations.

For data-driven decisions to be reliable, the underlying data must be of high quality. Thresholds play a key role in ensuring this quality, highlighting data that is not reliable enough to support critical decisions.

Data quality directly impacts the customer experience, from personalized recommendations to the accuracy of contact information. Thresholds help ensure that the data that supports the customer experience meets high quality standards.

Threshold types

A threshold can be set as an absolute or a relative value:

- Absolute value: the exact number of values that satisfy the conditions of the Data Quality Control.
- Relative value: the ratio of values that satisfy the conditions of the Data Quality Control to a defined reference.
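The difference between the two threshold types can be illustrated with a small helper. This is a sketch under assumptions: the threshold is treated as a minimum on the values that satisfy the control, and the reference for the relative case is a record count supplied by the caller. The function name `meets_threshold` and its parameters are hypothetical.

```python
def meets_threshold(passing_count, threshold, reference_count=None):
    """Evaluate a control result against an absolute or relative threshold.

    Without `reference_count` the threshold is absolute (a minimum number of
    passing values); with it, the threshold is relative (a minimum ratio of
    passing values to the reference).
    """
    if reference_count is None:
        return passing_count >= threshold
    if reference_count <= 0:
        raise ValueError("reference_count must be positive for a relative threshold")
    return passing_count / reference_count >= threshold


# Absolute: at least 950 values must satisfy the control.
print(meets_threshold(980, 950))
# Relative: at least 99% of the 1000 reference values must satisfy the control.
print(meets_threshold(980, 0.99, reference_count=1000))
```

When the helper returns `False`, the result is the kind of deviation that would trigger an alert or corrective action as described earlier in this section.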